Feature Views

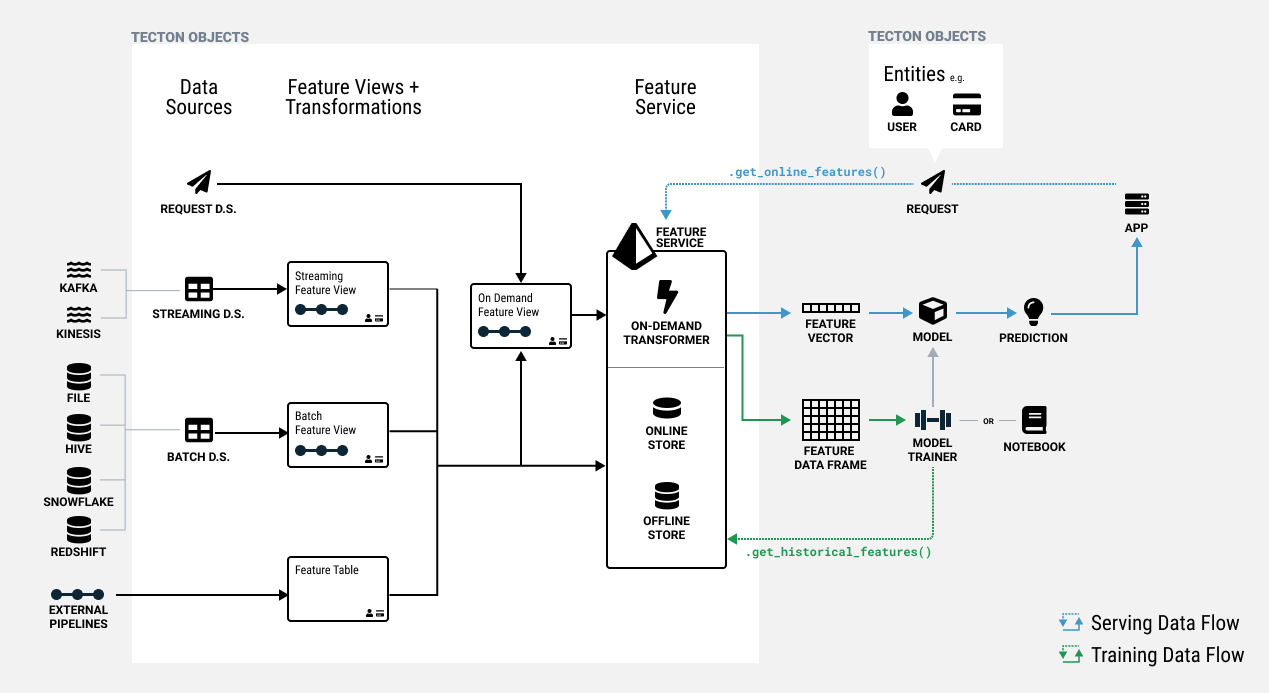

In Tecton, features are defined as a view on registered Data Sources or other Feature Views. Feature Views are the core abstraction that enables:

- Using one feature definition for both training and serving.

- Reusing features across models.

- Managing feature lineage and versioning.

- Orchestrating compute and storage of features.

A Feature View contains all information required to manage one or more related features, including:

- Pipeline: A transformation pipeline that takes in one or more sources and runs transformations to compute features. Sources can be Tecton Data Sources or in some cases, other Feature Views.

- Entities: The common objects that the features are attributes of, such as Customer or Product. The entities dictate the join keys for the Feature View.

- Configuration: Materialization configuration for defining the orchestration and serving of features, as well as monitoring configuration.

- Metadata: Optional metadata about the features used for organization and discovery. This can include things like descriptions and tags.
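Because entities dictate the join keys, every feature value a Feature View produces is addressed by the values of those keys. The sketch below models this with a plain dictionary keyed by a tuple of join-key values. The `Entity` class, the key names, and the feature names are hypothetical and do not reproduce Tecton's own `Entity` API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """Hypothetical stand-in for an entity: a name plus its join keys."""
    name: str
    join_keys: tuple


customer = Entity(name="Customer", join_keys=("customer_id",))
product = Entity(name="Product", join_keys=("product_id",))

# A Feature View over (Customer, Product) is keyed by both entities' join keys.
feature_view_entities = [customer, product]
join_keys = [k for e in feature_view_entities for k in e.join_keys]

# Hypothetical feature values, addressed by (customer_id, product_id).
feature_values = {
    ("c1", "p9"): {"purchases_30d": 2},
    ("c2", "p9"): {"purchases_30d": 0},
}


def lookup(row: dict) -> dict:
    """Build the lookup key from a row in join-key order and fetch its features."""
    key = tuple(row[k] for k in join_keys)
    return feature_values.get(key, {})


print(join_keys)                                          # ['customer_id', 'product_id']
print(lookup({"customer_id": "c1", "product_id": "p9"}))  # {'purchases_30d': 2}
```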

The pipeline and entities of a Feature View define the semantics of what the feature values truly represent. Changes to a Feature View's pipeline or entities are therefore considered destructive and will result in the rematerialization of feature values.
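The rule above can be illustrated with a small pure-Python sketch. The `FeatureViewSpec` class and the `requires_rematerialization` function are hypothetical, written only to show which parts of a definition are treated as semantic; they are not part of the Tecton SDK, and Tecton's real change detection is not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FeatureViewSpec:
    """Hypothetical, simplified stand-in for a Feature View definition."""
    pipeline: str               # e.g. a fingerprint of the transformation logic
    entities: tuple             # entity names, which determine the join keys
    description: str = ""       # metadata: does not change what values mean
    tags: dict = field(default_factory=dict)


# Fields that define the semantics of the feature values.
SEMANTIC_FIELDS = ("pipeline", "entities")


def requires_rematerialization(old: FeatureViewSpec, new: FeatureViewSpec) -> bool:
    """Return True if any semantic field differs between the two definitions."""
    return any(getattr(old, name) != getattr(new, name) for name in SEMANTIC_FIELDS)


v1 = FeatureViewSpec(pipeline="sum(amount) over 7d", entities=("user",))
v2 = FeatureViewSpec(pipeline="sum(amount) over 7d", entities=("user",), description="7-day spend")
v3 = FeatureViewSpec(pipeline="sum(amount) over 30d", entities=("user",))

print(requires_rematerialization(v1, v2))  # False: only metadata changed
print(requires_rematerialization(v1, v3))  # True: the pipeline changed
```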

Concept: Feature Views in a Feature Store

Types of Feature Views

There are three types of Feature Views:

- Batch Feature Views run transformations on one or more Batch Sources and can materialize feature data to the Online and/or Offline Feature Store on a schedule.

- Stream Feature Views run transformations on data from a Stream Source in near real time and can materialize feature data to the Online and Offline Feature Store.

- On-Demand Feature Views run transformations at request time based on data from a Request Source, Batch Feature View, or Stream Feature View.

| Name | Source types | Transformation types |
|---|---|---|
| Batch Feature View | Batch Data Source | pyspark, spark_sql, snowpark, snowflake_sql |
| Stream Feature View | Stream Data Source | pyspark, spark_sql |
| On-Demand Feature View | Request Data Source, Batch Feature View, Stream Feature View | python, pandas |
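The table above can be encoded as a simple lookup, for example to sanity-check a planned definition before writing it. This is an illustrative sketch: the `FEATURE_VIEW_TYPES` dictionary and the `check_definition` helper are hypothetical and mirror only the rows of this table, not Tecton's own validation.

```python
# Allowed source types and transformation types per Feature View type,
# copied from the table above.
FEATURE_VIEW_TYPES = {
    "Batch Feature View": {
        "sources": {"Batch Data Source"},
        "modes": {"pyspark", "spark_sql", "snowpark", "snowflake_sql"},
    },
    "Stream Feature View": {
        "sources": {"Stream Data Source"},
        "modes": {"pyspark", "spark_sql"},
    },
    "On-Demand Feature View": {
        "sources": {"Request Data Source", "Batch Feature View", "Stream Feature View"},
        "modes": {"python", "pandas"},
    },
}


def check_definition(fv_type: str, sources: list, mode: str) -> list:
    """Return a list of problems; an empty list means the combination matches the table."""
    allowed = FEATURE_VIEW_TYPES[fv_type]
    problems = [f"unsupported source: {s}" for s in sources if s not in allowed["sources"]]
    if mode not in allowed["modes"]:
        problems.append(f"unsupported mode: {mode}")
    return problems


print(check_definition("Stream Feature View", ["Stream Data Source"], "spark_sql"))  # []
print(check_definition("Stream Feature View", ["Batch Data Source"], "pandas"))
# ['unsupported source: Batch Data Source', 'unsupported mode: pandas']
```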

Defining a Feature View

A Feature View is defined using a decorator over a function that represents a pipeline of Transformations.

Below, we'll describe the high-level components of defining a Feature View. See the individual Feature View type sections for more details and examples.

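```python
from datetime import datetime, timedelta

from tecton import batch_feature_view

# `source`, `entity`, `my_transformation`, and `my_transformation_two` are
# placeholders for objects defined elsewhere in your feature repository.


# Feature View type
@batch_feature_view(
    # Pipeline attributes
    sources=[source],
    mode="<mode>",  # transformation type; see the table of supported types
    # Entities
    entities=[entity],  # determines the Feature View's join keys
    # Materialization and serving configuration (values below are examples)
    online=True,
    offline=False,
    batch_schedule=timedelta(days=1),         # how often materialization runs
    feature_start_time=datetime(2022, 1, 1),  # earliest time to materialize features from
    ttl=timedelta(days=30),                   # how long a feature value stays valid
    # Metadata
    owner="<owner>",
    description="<description>",
    tags={},
)
# Feature View name (defaults to the function name)
def my_feature_view(input_data):
    intermediate_data = my_transformation(input_data)
    output_data = my_transformation_two(intermediate_data)
    return output_data
```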

See the API reference for the specific parameters available for each type of Feature View.

Registering a Feature View

Feature Views are registered by adding a decorator (e.g. `@batch_feature_view`) to a Python function. The decorator supports several parameters to configure the Feature View.

The default name of the Feature View registered with Tecton is the name of the function. If needed, the name can be set explicitly using the `name` decorator parameter.

The function's inputs are retrieved from the specified sources in the same order: the first entry in `sources` is passed as the first argument, the second as the second, and so on. Tecton uses the function's pipeline definition to construct, register, and execute the specified graph of transformations.
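To make the naming and input-ordering rules concrete, here is a toy decorator in plain Python. `toy_feature_view` and `REGISTRY` are hypothetical names invented for this sketch. They imitate only the behaviors described above (the default name comes from the function, `name` overrides it, and sources are passed to the function positionally in the order listed) and say nothing about how Tecton actually implements registration.

```python
from typing import Callable, Optional

# Hypothetical registry mapping registered names to runnable Feature Views.
REGISTRY: dict = {}


def toy_feature_view(sources: list, name: Optional[str] = None):
    """Hypothetical decorator: registers the function and binds sources by position."""
    def decorator(func: Callable) -> Callable:
        registered_name = name or func.__name__  # default: the function's own name

        def run():
            # The i-th source becomes the i-th positional argument of the function.
            return func(*sources)

        REGISTRY[registered_name] = run
        return run
    return decorator


@toy_feature_view(sources=[[1, 2, 3], [10, 20, 30]])
def user_totals(clicks, purchases):
    return {"clicks": sum(clicks), "purchases": sum(purchases)}


@toy_feature_view(sources=[[5, 5]], name="renamed_view")
def some_function(values):
    return {"total": sum(values)}


print(sorted(REGISTRY))           # ['renamed_view', 'user_totals']
print(REGISTRY["user_totals"]())  # {'clicks': 6, 'purchases': 60}
```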

Defining the Feature View Function

A Feature View contains one function that is invoked when the Feature View runs. The function specifies transformation logic to run against data retrieved from external data sources. Alternatively, the function can call one or more functions defined outside the Feature View that contain the transformation logic. For details, see the Transformations section.
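The pattern in the Feature View template, where the Feature View function only delegates to helper transformations, can be sketched in plain Python. The helper names `my_transformation` and `my_transformation_two` come from that template; their bodies here (dropping events with no amount, then summing per user) are hypothetical, and plain lists of dicts stand in for the Spark or pandas DataFrames a real pipeline would pass between steps.

```python
from collections import defaultdict


def my_transformation(rows):
    """Hypothetical cleaning step: drop events with no amount."""
    return [r for r in rows if r.get("amount") is not None]


def my_transformation_two(rows):
    """Hypothetical aggregation step: total amount per user_id, sorted by user_id."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["user_id"]] += r["amount"]
    return [{"user_id": k, "total_amount": v} for k, v in sorted(totals.items())]


def my_feature_view(input_data):
    # Same shape as the template: the Feature View function just wires steps together.
    intermediate_data = my_transformation(input_data)
    output_data = my_transformation_two(intermediate_data)
    return output_data


events = [
    {"user_id": "u1", "amount": 10.0},
    {"user_id": "u1", "amount": None},
    {"user_id": "u2", "amount": 4.5},
    {"user_id": "u1", "amount": 2.5},
]
print(my_feature_view(events))
# [{'user_id': 'u1', 'total_amount': 12.5}, {'user_id': 'u2', 'total_amount': 4.5}]
```

Keeping each step in its own function lets the steps be tested individually and reused by other Feature Views.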

Interacting with Feature Views

Once you have applied your Feature View to the Feature Store, the Tecton SDK provides a set of methods for working with it from a notebook. Here are a few examples of common actions.

Retrieving a Feature View Object

First, retrieve the Feature View by its registered name from the workspace it was applied to.

```python
import tecton

ws = tecton.get_workspace("prod")
feature_view = ws.get_feature_view("user_ad_impression_counts")
```

Running Feature View Transformation Pipeline

You can dry-run the transformation pipeline of any type of Feature View from a notebook.

```python
result_dataframe = feature_view.run()

# Convert the result to pandas and render it with the notebook's display() function.
display(result_dataframe.to_pandas())
```

See the API reference for the `run()` parameters available for each type of Feature View.

For a Stream Feature View, you can also run the streaming job. This writes the output to a temporary table that can be queried:

```python
# Start the streaming job; its output is written to the temporary table "temp_table".
feature_view.run_stream(output_temp_table="temp_table")

# Query the output table (`spark` is the notebook's active SparkSession).
display(spark.sql("SELECT * FROM temp_table LIMIT 5"))
```

Reading Feature View Data

Reading a sample of feature values can help you validate that a feature is implemented correctly, or understand the data structure when exploring a feature you're unfamiliar with.

For Batch and Stream Feature Views, you can use the `FeatureView.get_historical_features()` method to view some output from your new feature. To make the query run faster, use the `start_time` and `end_time` parameters to select a subset of dates, or pass an `entities` DataFrame of keys to view results for only those entity keys.

By default, `get_historical_features()` retrieves data from the Offline Feature Store, but you can bypass the offline store and run the transformations on the fly by passing `from_source=True`.

```python
from datetime import datetime, timedelta

start_time = datetime.today() - timedelta(days=2)
results = feature_view.get_historical_features(start_time=start_time)

display(results)
```
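Conceptually, `start_time`, `end_time`, and `entities` narrow the rows that come back. The sketch below imitates that filtering on an in-memory list so the effect of each parameter is easy to see. It is not how Tecton executes the query; the `preview_rows` helper, the `user_id` join key, and the column names are hypothetical, and treating `start_time` as inclusive and `end_time` as exclusive is an assumption of this sketch.

```python
from datetime import datetime
from typing import Optional


def preview_rows(rows, start_time: Optional[datetime] = None,
                 end_time: Optional[datetime] = None,
                 entity_keys: Optional[set] = None,
                 join_key: str = "user_id"):
    """Keep rows inside [start_time, end_time) whose join key is in entity_keys.

    Inclusive start / exclusive end is an assumption made for this sketch.
    """
    selected = []
    for row in rows:
        ts = row["timestamp"]
        if start_time is not None and ts < start_time:
            continue
        if end_time is not None and ts >= end_time:
            continue
        if entity_keys is not None and row[join_key] not in entity_keys:
            continue
        selected.append(row)
    return selected


rows = [
    {"user_id": "u1", "timestamp": datetime(2023, 5, 1), "ad_impressions": 3},
    {"user_id": "u2", "timestamp": datetime(2023, 5, 2), "ad_impressions": 7},
    {"user_id": "u1", "timestamp": datetime(2023, 5, 3), "ad_impressions": 1},
]
print(len(preview_rows(rows, start_time=datetime(2023, 5, 2))))  # 2
print(len(preview_rows(rows, entity_keys={"u1"})))               # 2
print(len(preview_rows(rows, datetime(2023, 5, 2), datetime(2023, 5, 3), {"u2"})))  # 1
```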

Because On-Demand Feature Views depend on request data and/or other Batch and Stream Feature Views, they cannot simply be looked up from the Feature Store with `get_historical_features()` unless the required input data is provided. Refer to On-Demand Feature Views for more details on how to test and preview these Feature Views.
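It can help to think of an On-Demand Feature View's transformation as a plain function of its inputs. The sketch below shows only the body of such a function in Python, with the Tecton decorator and output-schema declaration deliberately left out; the function name, the input names (`transaction_request`, `user_transaction_averages`), the fields, and the threshold logic are all hypothetical. Previewing it means supplying both the request data and the upstream feature values explicitly.

```python
def transaction_amount_is_high(transaction_request: dict,
                               user_transaction_averages: dict) -> dict:
    """Hypothetical python-type transformation.

    transaction_request: request data, e.g. {"amount": 120.0}
    user_transaction_averages: values from an upstream Batch or Stream
        Feature View, e.g. {"avg_amount_30d": 40.0}
    """
    amount = transaction_request["amount"]
    average = user_transaction_averages.get("avg_amount_30d")
    # Without a historical average, fall back to a fixed threshold (an arbitrary choice).
    threshold = 3 * average if average else 100.0
    return {"amount_is_high": amount > threshold}


# Both kinds of input must be supplied explicitly to preview the output.
print(transaction_amount_is_high({"amount": 120.0}, {"avg_amount_30d": 40.0}))  # {'amount_is_high': False}
print(transaction_amount_is_high({"amount": 150.0}, {"avg_amount_30d": 40.0}))  # {'amount_is_high': True}
```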